Robots.txt in SEO – The Silent Commander of Web Traffic

On today's web, robots.txt plays a central role in SEO: it tells search engine crawlers which parts of a site they may visit.

This article unpacks how robots.txt works, why it matters, and how it affects search engine rankings.

Whether you’re a seasoned webmaster or a curious newcomer, join us on this journey to demystify the art of directing search engine traffic.

To learn more techniques for ranking with SEO, please follow my blog, and let me know if it interests you.

Understanding the robots.txt File

Deciphering the Essence of Robots.txt in SEO

Robots.txt serves as the silent architect behind website accessibility for search engines. This section peels back the layers, explaining the purpose and importance of this vital file.

Robots.txt is a plain text file placed at the root of your site (for example, https://www.yourwebsite.com/robots.txt). Search engine bots read it before crawling, and its rules tell them which paths they may request as they work through your website.

Analyzing the Elements

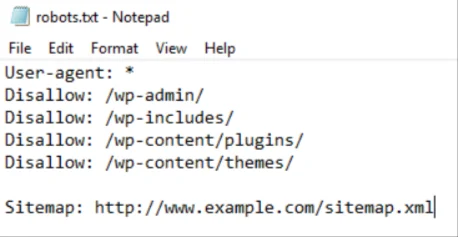

- User-agent: Different search engines or web crawlers may interpret instructions differently. The User-agent directive specifies which crawler the rules that follow apply to.

For example:

User-agent: Googlebot

The rules that follow this line apply only to Googlebot. To address every crawler, use User-agent: * instead.

- Disallow: This directive tells the crawler not to crawl specific parts of your site.

For instance, if you want to exclude a directory named “private,” you would use:

Disallow: /private/

- Allow: Conversely, you can use the Allow directive to permit crawling of specific areas within a disallowed section.

For instance, to keep a “public” subdirectory inside the disallowed “private” directory crawlable:

Allow: /private/public/

- Sitemap: You can include the location of your XML sitemap in the robots.txt file. This helps search engines discover your important pages efficiently.

For example:

Sitemap: https://www.yourwebsite.com/sitemap.xml
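
To see how these four directives combine, here is a minimal sketch using Python's standard urllib.robotparser module. The robots.txt content, the paths, and the helper name is_allowed are assumptions made up for illustration. Note that Python's parser applies the first matching rule in file order, whereas Google applies the most specific (longest) matching rule, so the Allow line is placed before the Disallow line to get the same result under both interpretations.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt combining the four directives described above.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /private/public/
Disallow: /private/

User-agent: *
Disallow: /tmp/

Sitemap: https://www.yourwebsite.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(user_agent: str, path: str) -> bool:
    """Return True if user_agent may crawl path on the example site."""
    return parser.can_fetch(user_agent, "https://www.yourwebsite.com" + path)


# Googlebot is kept out of /private/ except for the allowed subdirectory.
print(is_allowed("Googlebot", "/private/report.html"))        # False
print(is_allowed("Googlebot", "/private/public/index.html"))  # True
# Other crawlers fall back to the "*" group, which only blocks /tmp/.
print(is_allowed("Bingbot", "/private/report.html"))          # True
# Sitemap lines are collected separately (Python 3.8+).
print(parser.site_maps())
```

The last line prints a list containing the sitemap URL declared in the file.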

The Necessity of robots.txt in SEO

- Control Search Engine Crawling: A robots.txt file guides search engine crawlers, letting you decide which sections of your website are crawled and giving you more control over your online visibility.

- Preserve Bandwidth: By directing crawlers away from resource-intensive areas, robots.txt helps conserve bandwidth, ensuring smoother website performance for visitors.

- Protect Sensitive Content: Robots.txt can ask crawlers to stay out of directories such as admin or internal areas. It is not a security mechanism, though: the file is publicly readable and compliance is voluntary, so confidential information still needs authentication or other access controls.

- Prevent Crawling of Duplicate Content: Robots.txt can keep crawlers away from duplicate or near-duplicate URLs, such as printer-friendly or parameterized versions of a page, so crawl activity is not wasted on them.

- Improve SEO Focus: A robots.txt file steers crawlers toward essential content, so that crawl activity is spent on the key pages you want search engines to rank.

- Facilitate Crawling Efficiency: Listing your XML sitemap in the robots.txt file helps search engines discover and crawl your most relevant content efficiently.

- Prevent Unintentional Crawling: During development, robots.txt can keep crawlers away from unfinished pages. A disallowed URL can still appear in search results if other pages link to it, so rely on password protection when a page must stay out of search results entirely.

Best Practices for Managing the robots.txt file

- Create a Comprehensive Robots.txt File: Craft a robots.txt file that accurately reflects the structure of your site. This ensures that search engines receive clear instructions.

- Regularly Update the Robots.txt File: As your website evolves, so should your robots.txt file. Regularly review and update it to reflect changes in your site’s structure or content.

- Test Before Implementation: Before deploying a new robots.txt file, check it with a testing tool, such as the robots.txt report in Google Search Console, to ensure it doesn’t inadvertently block important pages.

- Understand the Implications of Disallow: Exercise caution when using the Disallow directive, as a rule that is too broad can prevent search engines from crawling vital content. For example, Disallow: / blocks the entire site.
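
The "test before implementation" practice can also be scripted. The following is a minimal sketch, again with Python's standard urllib.robotparser, that checks a draft file against a list of pages you care about. The function name blocked_urls, the draft rules, and the URLs are all hypothetical, and Python's parser may resolve overlapping Allow and Disallow rules differently from a given search engine, so treat it as a first sanity check rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "*") -> list[str]:
    """Return the URLs that the draft robots.txt would block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical draft file: the missing trailing slash makes the rule
# broader than intended, since it matches every path starting with /blog.
draft = """\
User-agent: *
Disallow: /blog
"""

# Hypothetical list of pages that must remain crawlable.
important = [
    "https://www.yourwebsite.com/",
    "https://www.yourwebsite.com/blog/robots-txt-guide",
    "https://www.yourwebsite.com/contact",
]

for url in blocked_urls(draft, important):
    print("Blocked:", url)
```

Here only the blog article is reported as blocked, which would flag the overly broad Disallow rule before the file goes live.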

Avoiding Common Mistakes in Robots.txt Configuration

- Incorrect Syntax: A small syntax error, such as a misspelled directive or a missing colon, can render your robots.txt file ineffective. Double-check your syntax to avoid unintentional issues.

- Blocking Important Pages: Be mindful not to block pages critical to SEO, such as your homepage, from being crawled.

- Assuming Instant Changes: Search engines may take some time to process changes to your robots.txt file. Google, for example, generally caches the file for up to 24 hours. Be patient and monitor the impact over time.
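
Syntax mistakes like the ones above are easy to catch automatically. Below is a rough sketch of a line-by-line check in Python. The function lint_robots_txt and its rules are assumptions for illustration, not a standard tool: it only knows the four directives covered in this article, so directives supported by individual crawlers beyond these would be reported as unknown.

```python
# Directives covered in this article; this set is an illustrative assumption.
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap"}


def lint_robots_txt(text: str) -> list[str]:
    """Return human-readable warnings for lines that look malformed."""
    warnings = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            warnings.append(f"line {number}: missing ':' separator")
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        directive = name.lower()
        if directive not in KNOWN_DIRECTIVES:
            warnings.append(f"line {number}: unknown directive '{name}'")
        elif directive == "user-agent":
            seen_user_agent = True
        elif directive in {"allow", "disallow"}:
            if not seen_user_agent:
                warnings.append(f"line {number}: rule appears before any User-agent")
            # An empty Disallow value is valid and means "allow everything".
            if value and not value.startswith(("/", "*")):
                warnings.append(f"line {number}: path should start with '/'")
    return warnings


# Hypothetical file containing three typical mistakes.
sample = """\
User-agent: *
Dissallow: /private/
Disallow private/
Disallow: private/
"""

for warning in lint_robots_txt(sample):
    print(warning)
```

Running it on the sample reports the misspelled directive on line 2, the missing colon on line 3, and the path without a leading slash on line 4.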

FAQs: Demystifying the robots.txt File

Is a Robots.txt File Necessary for Every Website?

Not strictly. If a site has no robots.txt file, crawlers assume they may crawl everything. A robots.txt file is still recommended for any website that wants to control how search engines crawl its content, because it lets webmasters grant or restrict crawler access to specific areas.

How Frequently Should a Robots.txt File Be Updated?

Review the file whenever your website changes. When new sections are added or the URL structure is modified, make sure your robots.txt file still matches the site so that search engines can crawl what you intend.

Can a Robots.txt File Impact SEO Rankings?

Yes, though indirectly. A well-configured robots.txt file does not raise rankings by itself, but it helps crawlers reach your important pages efficiently. On the flip side, errors in the file can block key pages from being crawled, leading to poor visibility and a drop in rankings.

Are There Standard Directives for a Robots.txt File?

While there are standard directives, their implementation depends on the specific needs of your website. Customizing directives allows you to tailor a Robots.txt file to your content and enhance its effectiveness.

How Can I Test the Effectiveness of My Robots.txt File?

Various online tools are available to test and analyze your Robots.txt file. Regularly check for crawl issues and ensure that the file aligns with your SEO strategy for optimal results.

What Happens If a Robots.txt File Is Misconfigured?

Misconfigurations can lead to unintended consequences, such as blocking search engine crawlers from crawling your entire site. Regularly audit your robots.txt file to catch and rectify any misconfigurations promptly.

Conclusion

Managing robots.txt is an ongoing collaboration between webmasters and search engines: you publish the rules, and well-behaved crawlers follow them as they explore your site.

As you work with robots.txt, remember that a properly configured file helps search engines crawl your site efficiently, which supports your visibility in the competitive world of online search.